So in this tutorial, we are going learn about how to get Historical stock data using pandas_datareader from yahoo finance along with basics of pandas dataframe and moving average. We will also learn how to store the obtained data in a file, cache, cleaning the data by adding missing rows and at last how to visualise it by making a graph.

Installing Requirements

We need to install a few libraries before getting started.pip install pandas_datareaderpip install pandaspip install matplotlibpip install requests_cache

Well if you don’t know about pandas or dataframes. Need not to worry. We will discuss them too.

Terminology

I will be using few technical terms in this tutorial so if you don’t understand you can refer here.

- Dataframe: Just imagine data in an excel sheet with columns and rows. It’s somewhat similar to that. It stores data in the form of an object and has many predefined functions to perform various operations.

- Ticker: Sometimes known as a “stock” or “sock code”, it is a unique identifier for each companies name. Every company has its own unique ticker. Like AAPL for Apple, FB for Facebook, AMZN for Amazon, etc.

Extracting data from yahoo with Pandas

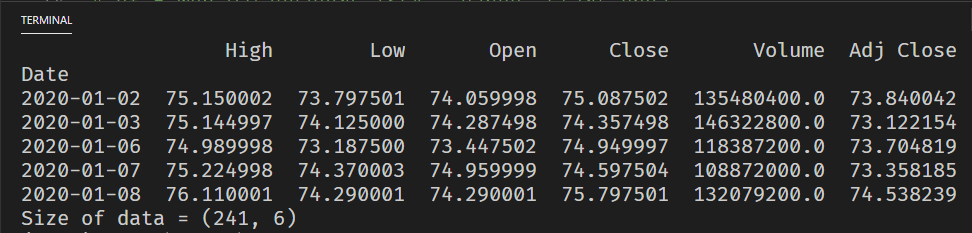

We will be using Yahoo Finance for obtaining stock data. Firstly we will import pandas_datareader.data then send a request to yahoo finance with ticker , start and end date. The “pandas_datareader” module is a useful module that abstracts extracting web data very easily. You don’t have to bother with “requests” or “urllib” and parse the HTML data, this is all done for you! From the module in response we will get a dataframe containing our data in rows and columns . The columns are basically High , Low , Open , Close , Volume and Adj Close.

import pandas_datareader.data as web

import datetime as dt

ticker = "AAPL" # This is Apple's ticker

start = dt.datetime(2020,1,1) # Starting date in format (year,month,day)

end = dt.datetime(2020,12,1) # Ending date

df = web.DataReader(ticker,"yahoo",start,end)

print(df.head()) # Prints first 5 columns of data

print(df.shape) # Prints the number of rows and columns

df = web.DataReader(ticker,"yahoo",start,end)- This stores the dataframe obtained in the variable

df. The first value passed here is ticker i.e. “AAPL” , second is the source name . As we are using yahoo finance here so it should be “yahoo”. Then the end and start date (in the form of datetime). You can see other data sources here.

- This stores the dataframe obtained in the variable

print(df.head())- It prints the first 5 columns of the data obtained.

print(df.shape)- Prints the rows and columns in the dataframe . As seen in output there are 241 rows and 6 columns

You can also use end = dt.date.today() to set end as today.

Managing data with Dataframes

Few commands which you can use to extract custom data you want from the dataframe obtained in the above code.

df.head(): To get first 5 columnsdf.tail(): To get last 5 columnsdf.head(<int>): To get first int no of columns . Like df.head(20) for first 20 columnsdf.tail(<int>): To get last int no. of columns.df.shape: To get columns and rowsdf.columns: To get no. of columnsdf.loc["2020-01-06"]: To get data of exact date

How to store dataframe data to a file

After obtaining the data next task is to store it as the historical data is not going to change. It make our script fast as we can re-use the stored data instead of making api calls.

Storing dataframe data in a file using pickle

import pandas_datareader.data as web

import datetime as dt

ticker = "AAPL"

start = dt.datetime(2020,1,1)

end = dt.datetime(2020,12,1)

df = web.DataReader(ticker,"yahoo",start,end)

# Now storing the data in csv file

df.to_csv("data.csv")You can also use this to dump the object in a binary file.df.to_pickle("data.bin") : Dumps in binary format.

Reading dataframe data from the file using pickle

Reading the data from file is as easy as storing it.

import pandas as pd

df = pd.read_csv("data.csv",parse_dates=True,index_col=0)

# or

df = pd.read_pickle("data.bin")Caching the dataframe data to improve speed

DataReader also supports caching of data. Sending requests again and again may slow down your code and can also result in the ban of your IP.

You can create cache using request_cache by passing a request_cache.Session to DataReader using session parameter.

import pandas_datareader.data as web

import datetime as dt

import requests_cache

ticker = "AAPL"

start = dt.datetime(2020,1,1)

end = dt.date.today()

expiry = dt.timedelta(hours=2)

session = requests_cache.CachedSession(cache_name="cache",backend="sqlite",expire_after=expiry)

df = web.DataReader(ticker,"yahoo",start,end,session=session)When you will run this code from the second time onwards you will observe that the program runs very fast. The reason is it uses the cache to respond your requests. The cache is valid for the time given in expire_after parameter. In our case, it’s 2 hours.

Operating on pandas dataframe data

Now it’s time to perform some operations on the data and make it more appealing and meaningful. Starting with Cleaning the data.

Cleaning datareader data

If you clearly observe the data given below you will see that the data of some weekdays are missing. (Note: Stockmarket are closed on weekends)

Data of 20 Jan 2020 (Monday) is missing.

Like it there are many weekday’s data missing in the whole dataframe and if we perform any operation on this dataframe it won’t be perfect. So to solve this issue we need to create a column consisting of each weekdays then replace our previous dates column with this new one. This isn’t the only approach to handle “gap data” or “missing data”, but it is a simple method. Other approaches to take are to average the value between the missing row.

import pandas as pd

import datetime as dt

start = dt.datetime(2020,1,2)

end = dt.date.today()

df = pd.read_pickle("hello.bin")

alldays = pd.date_range(start=start, end=end, freq='B')

df = df.reindex(alldays)

df = df.fillna(method='ffill')alldays = pd.date_range(start=start, end=end, freq='B')- This creates a DatetimeIndex (think it as a column) of all weekdays between

startandend.

- This creates a DatetimeIndex (think it as a column) of all weekdays between

df = df.reindex(alldays)- This line replaces our previous Dates column with the new one that has all dates. All the dates that dont have a value are set as NaN meaning Not a Number.

- df = df.fillna(method=”ffill”)

- This replaces all the empty NaN data with the data of nearest date.

After performing this if we look at our new data we will see that. 20 Jan has been added and it’s data has been copied from 17 Jan.

Creating Moving Average with Pandas Dataframe

What is the moving average? Suppose if I say a moving average of 10 days data from today’s date of 2020-12-20. That means to get the average of all the data between 2020-12-11 to 2020-12-20. Then on 2020-12-21 the moving average would the be the average of all the data between 2020-12-12 to 2020-12-21 (the “window” then “moves” to the last 10 days). In stock analysis it helps to smoothen out the constant day to day changes to see if there is a general trending of upward or downward and to eliminate any fluctuations.

In this example we will create and a new column in our dataframe which will contain the moving average of 30 days.

import pandas as pd

df = pd.read_pickle("hello.bin")

df["ma30"] = df["Adj Close"].rolling(window=30,min_periods=0).mean()

print(df.tail())

As you can see it created a new column in our dataframe as ma30 which has moving average of 30 days. We used used rolling() function for it.

window=30: The no of Days in moving averagemin_periods=0: We used this option parameter to make sure our first 29 values aren’t empty . Because as they don’t have 30 days for first 29 values to take average. So this parameter makes sure that if they have less days than 30. No problem take average of the available days before it . Hence the moving average of first day is itself , the second day is average of first and second and so on till 30.

Visualize data with matplotlib

Looking at large texts doesn’t gives us much information. So let’s convert the obtained data to a graph. (Assuming the data is already stored in the file data.bin in binary format)

import pandas as pd

import matplotlib.pyplot as plt

df = pd.read_pickle("hello.bin")

df["Open"].plot()

df["Adj Close"].plot()

plt.legend()

plt.show()

Lets add some more code to improve the graph. Here we will be creating 2 sub-graphs embedded in one figure . The top one will be a line graph representing the Adj Close and below one is a filled polygon graph representing the volume. We will be using some advanced features of matplotlib.

subplot2grid is used to create graphs based on grid formats . Here we are creating a grid of 6 rows and 1 columns in which the first sub-graph will occupy 5 rows and second one will occupy 1 row. The size of first graph will be 5 rowspan and second’s will be 1 rowspan.

import pandas as pd

import matplotlib.pyplot as plt

df = pd.read_pickle("hello.bin")

fig = plt.subplots(figsize=(10,5))

ax1 = plt.subplot2grid((6,1),(0,0),rowspan=5,colspan=1)

plt.grid()

ax2 = plt.subplot2grid((6,1),(5,0),rowspan=1,colspan=1,sharex=ax1)

ax1.plot(df.index,df["Adj Close"])

ax2.fill_between(df.index,df["Volume"],0)

plt.show()

fig = plt.subplots(figsize=(10,5))- Sets the window size. You can obiviously scroll the borders but initialising the window is recommended.

ax1 = plt.subplot2grid((6,1),(0,0),rowspan=5,colspan=1)- Creates space for first graph . (6,1) is the gridsize and (0,0) is the position from where the figure of this graph will start which is obiviously start point.

ax2 = plt.subplot2grid((6,1),(5,0),rowspan=1,colspan=1,sharex=ax1)- Creates space for second graph . (6,1) is the gridsize which will be same as given in the first one and (5,0) is the position which means from 5th row that is below the first graph.

ax1.plot(df.index,df["Adj Close"])- Plots the

df.indexi.e the Date on the x axis and"Adj Close"data on the y axis in the form of line graph .

- Plots the

ax2.fill_between(df.index,df["Volume"],0)- Plots the

df.indexi.e. the Date on x axis and"Volume"data on y axis in the form of filled polygons.

- Plots the

Conclusion

There are many types of analysis you can make on stock data such as: analysing if current value is at a week/month/annual high, if we have a trending upwards compared to the sector or the market as a whole, if the trend is due to announcements, and many more things. Here we’ve covered the very first and important step of extracting data and visualizing this which will be a great foundation to begin with.

Code away!

Get Notified Automatically Of New Articles

Error SendFox Connection:

Good article. As a C# programmer, I am very impressed with Python. The install requests_cache had a typo at the beginning of the article, request_cache was not found.

cheers Fernando – fixed now. Python is super flexible and has been my go to language for quite sometime. I personally started out on C -> C++ -> Perl and found Python at the time not as compelling as Perl.. but as the pypi libraries grew and seeing more and more production systems under Python you just could not ignore that capabilities 🙂

This latest news from Guido is quite exciting as well to address one of the bigger weaknesses of Python:

https://www.zdnet.com/article/python-programming-we-want-to-make-the-language-twice-as-fast-says-its-creator/